Imagine the worst programmer possible. Here's how he writes code:

- Open up the source in an editor and hit some keys at random.

- Try to compile.

- If it doesn't compile, undo the change and try again.

- If it does compile, send it out to QA.

- If QA doesn't like it, throw away the change.

- Rinse, Lather, Repeat.

Now here's what I want you to see, to really wrap your mind around: this guy designed the human brain.

It took him a few billion years, but he did it.

Given the basic mechanism of evolution, there is no reason whatsoever to believe that our minds are elegant. We're better off seeing it as the ultimate legacy system, a massive layering of egregious hacks and duplicate code.

The human mind is not well organized. We can look for rules, but we should expect exceptions. We can't prove anything about such a messy system, but we can proceed empirically.

The reason I'm going off about brains is that I'm about to talk about problem solving in an abstract way, as if it were a simple optimization problem. I have to! Whenever we talk about the human mind, there are so many details that when we try to be thorough, we just end up being obscure. So let's just agree to speak concisely about a complex topic, and to remember "but of course there are obvious exceptions."

Productivity

By "productive problem solving," I mean solving reasonably difficult problems in a reasonable amount of time. I don't mean solving problems super quickly, since that relies on flashes of insight, which are unreliable. And I don't mean solving truly difficult and interesting problems, which is an advanced subject. I mean solving problems with time scales of hours or days; problems difficult enough that the solution - or the method - isn't obvious, but problems that we can look at and be certain of solving given enough time.

You know. Like work.

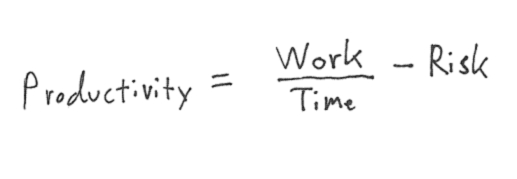

Let's define productivity as decreasing the solution time while minimizing risk.

Now that we have a definition for productivity, what can be done to optimize it? I'm going to focus on one powerful and very general solution: divide and conquer.

Divide and Conquer

Stuck on a problem that seems too complex to approach? Divide it into smaller problems, solve those, and integrate the solutions. I'm not talking about quicksort here. Such algorithms do very well by dividing a problem into multiple smaller problems of the same form. When humans divide problems, they divide them into dissimilar problems. In Zen and the Art of Motorcycle Maintenance this is called Phaedrus's knife:

Phædrus was a master with this knife, and used it with dexterity and a sense of power. With a single stroke of analytic thought he split the whole world into parts of his own choosing, split the parts and split the fragments of the parts, finer and finer and finer until he had reduced it to what he wanted it to be.

The knife is about finding ways to focus on the different aspects of a complex system serially, one at a time. We do this all the time, in daily life and at work. We can only hold about seven objects in working memory, so of course we have to chop things up before they'll fit.

Let's see if Henry Ford's assembly line can make things clearer. Instead of dividing by work: "You build those five cars and I'll build these five cars," Ford divided by role: "You build ten engines, and I'll build ten frames." This was a good cut because each sub-problem is simpler than the original problem. Our brains don't handle complexity very well, so simpler also means faster and easier.

The Ford example actually understates my case. In manufacturing, simplicity makes a relatively small contribution towards productivity: at the end of the day, we still have to do a lot of real work. But in programming, simplicity is the whole thing. Instead of 10 percent, we can expect to see 50 to 100 percent gains.

The Formula

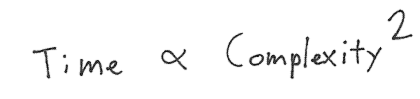

Why do I think that? It's because the difficulty of a problem, measured in the time it takes to solve it, is non-linear in complexity:

I just pulled the quadratic exponent out of a hat, but as a heuristic it's reasonable. I happen to think the exponent is somewhere between 1 and 2, but prefer to use something easy to compute with. This formula implies that if I could get a really clean cut and divide a problem into two pieces of half complexity each, I'd reduce the total time by a factor of two! And if I could cleanly cut each of those problems, I'd reduce the total time by a factor of four...
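To make that arithmetic concrete, here's a minimal Python sketch of the heuristic, time = complexity squared. The function names `solve_time` and `time_after_clean_cuts` are made up for this illustration, the complexity of 8 is an arbitrary unit, and cut overhead is deliberately ignored.

```python
def solve_time(complexity, exponent=2.0):
    """Heuristic time to solve a problem: complexity raised to an exponent."""
    return complexity ** exponent


def time_after_clean_cuts(complexity, rounds, exponent=2.0):
    """Total time after `rounds` rounds of perfectly clean halving.

    Each round doubles the number of pieces and halves the complexity
    of each piece. The cost of making the cuts is ignored here.
    """
    pieces = 2 ** rounds
    return pieces * solve_time(complexity / pieces, exponent)


if __name__ == "__main__":
    whole = solve_time(8)  # 8 is an arbitrary complexity, chosen for round numbers
    for rounds in range(3):
        cut = time_after_clean_cuts(8, rounds)
        print(f"{rounds} round(s) of cuts: time {cut:g}, speedup {whole / cut:g}x")
```

With the exponent at 2, one round of clean cuts halves the time and two rounds quarter it. Pass `exponent=1.5` and a single clean cut only buys a speedup of about 1.4, so the choice of exponent matters more than the choice of units.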

But obviously this can't go on forever. There are at least two limiting factors:

- A clean cut's pretty hard. Typically, both sub-problems will be more than half as complex as the original.

- Making any kind of cut at all takes time, so there's some overhead.
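Both limits can be folded into the same heuristic. The sketch below is one possible way to do it, not a measured model: `share` (how complex each sub-problem is relative to the original) and `overhead` (time spent making the cut) are hypothetical parameters introduced for illustration, and the two sub-problems are assumed to be equally complex.

```python
def time_with_cut(complexity, share=0.5, overhead=0.0, exponent=2.0):
    """Time to solve a problem after one cut into two equal sub-problems.

    share:    complexity of each sub-problem as a fraction of the original
              (0.5 is a perfectly clean cut; higher means a messier cut).
    overhead: time spent deciding where and how to cut.
    """
    return overhead + 2 * (share * complexity) ** exponent


def cut_is_worth_it(complexity, share, overhead, exponent=2.0):
    """True if cutting beats attacking the whole problem in one piece."""
    uncut = complexity ** exponent
    return time_with_cut(complexity, share, overhead, exponent) < uncut


if __name__ == "__main__":
    # Assumed numbers: complexity 10, and 10 time units spent on the cut.
    for share in (0.5, 0.6, 0.7):
        verdict = "worth it" if cut_is_worth_it(10, share, overhead=10) else "not worth it"
        print(f"share {share}: {verdict}")
```

Under these assumptions a problem of complexity 10 takes 100 units uncut. A cut that leaves each piece at 60 percent of the original costs about 82 units including overhead, while one that leaves each piece at 70 percent costs about 108 and is a net loss. Even with free cuts, the quadratic version stops paying once each piece is more than roughly 71 percent as complex as the whole.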

Cutting Diamonds

In fact, there's a lot of overhead to making a good cut. Hours and days can slip by while we ponder design patterns and architectures and development process. It's a bit like cutting a diamond; it takes a lot of skill and concentration to make a cut that adds value to it.

And not all cuts are good: the seven-layer OSI networking model is a perfect example of a cut that just didn't work. Nice try though, guys! So obviously there are risks involved.

But if we get it right... oh man. Design pattern enthusiasts like to claim that patterns produce more maintainable code with weaker coupling. Which means that programmers can replace the components easily, which means they will, which means that if you create a well designed system, sooner or later every bit of it is going to be replaced. Even after every line of code has been replaced, there's your pure, elegant cut straight down the middle. Beautiful.

For example: the distinction between a parser and a lexer. This is unarguably a brilliant cut. I can test my formula against this example: How much longer would it take to write a full parser as a single module, versus writing the tokenizer and parser separately? Since this is an example of a "clean cut", my formula would say about a factor of two. You can judge for yourself if that's accurate; I think it's about right.
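For readers who haven't written one, here's a rough sketch of what that cut looks like in Python. The grammar (integers, `+ - * /`, parentheses) and the nested-tuple tree format are arbitrary choices for this illustration, not anything canonical. The thing to notice is that `tokenize` never thinks about grammar and `parse` never looks at a character.

```python
import re

# One token per match: a run of digits, or any single non-space character.
TOKEN_PATTERN = re.compile(r"\s*(?:(\d+)|(\S))")


def tokenize(text):
    """Lexer: characters in, (kind, value) tokens out. Knows nothing about grammar."""
    tokens = []
    for number, symbol in TOKEN_PATTERN.findall(text):
        if number:
            tokens.append(("NUM", int(number)))
        elif symbol in "+-*/()":
            tokens.append(("OP", symbol))
        else:
            raise SyntaxError(f"unexpected character {symbol!r}")
    return tokens


def parse(tokens):
    """Parser: tokens in, nested-tuple tree out. Never looks at raw characters."""
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else ("END", None)

    def advance():
        nonlocal pos
        token = peek()
        pos += 1
        return token

    def expression():  # expression := term (("+" | "-") term)*
        node = term()
        while peek() in (("OP", "+"), ("OP", "-")):
            _, op = advance()
            node = (op, node, term())
        return node

    def term():  # term := factor (("*" | "/") factor)*
        node = factor()
        while peek() in (("OP", "*"), ("OP", "/")):
            _, op = advance()
            node = (op, node, factor())
        return node

    def factor():  # factor := NUM | "(" expression ")"
        kind, value = advance()
        if kind == "NUM":
            return value
        if (kind, value) == ("OP", "("):
            node = expression()
            if advance() != ("OP", ")"):
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {value!r}")

    tree = expression()
    if peek() != ("END", None):
        raise SyntaxError("unexpected trailing tokens")
    return tree


if __name__ == "__main__":
    print(parse(tokenize("1 + 2 * (3 - 4)")))
```

Whitespace handling, multi-digit numbers and bad characters are entirely the lexer's problem; precedence and parentheses are entirely the parser's. Either half could be rewritten without touching the other, as long as the token format stays the same.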

Remember Productivity?

Wait a minute. What am I going on about? Brilliant cuts that re-shape the world? What's that got to do with productivity? In software development, a cut generally goes by the name "design." The point of my diamond cutting analogy was that the benefits of a good design last forever. On a typical project, that means they accrue over the life of the project. The larger the project, the larger the benefit.

But there's a limit.

The model doesn't imply that we should dissect a problem until there's nothing left. It implies that we should carefully divide it into a few sub-problems, and then get to work. Beyond a certain point, there's no point. So a couple of practical corollaries:

- Favor "heavy" classes. A class with a dozen lines of code is suspicious. A dozen classes each with a dozen lines of code is very suspicious.

- Don't over-design the "inside" of a module. If the module is cohesive and presents a clean interface, the design of the internals isn't that important; focus on other aspects of quality instead.

- Nest. If we can afford three hours of design, spend an hour to cut the problem in half, and then cut each half in half.
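The "there's a limit" claim falls out of the same heuristic once cuts cost something. This is a sketch under made-up numbers: every cut is assumed to cost the same fixed design time and to leave each piece at 60 percent of its parent's complexity. `total_time` and `best_depth` are hypothetical helper names, not part of any library.

```python
def total_time(complexity, depth, share=0.6, overhead_per_cut=5.0, exponent=2.0):
    """Design time plus solving time after `depth` levels of nested cuts.

    Depth 0 is no design at all; depth 2 is "cut it in half, then cut
    each half in half," which takes 1 + 2 = 3 cuts.
    """
    pieces = 2 ** depth
    cuts = pieces - 1
    piece_complexity = complexity * share ** depth
    return cuts * overhead_per_cut + pieces * piece_complexity ** exponent


def best_depth(complexity, max_depth=6, **model):
    """Nesting depth with the lowest total time under the assumed model."""
    return min(range(max_depth + 1), key=lambda d: total_time(complexity, d, **model))


if __name__ == "__main__":
    for depth in range(5):
        print(f"depth {depth}: total time {total_time(10, depth):.1f}")
    print("best depth:", best_depth(10))
```

With these assumed numbers, two levels of nesting is the sweet spot for a problem of complexity 10: a third level costs more in design time than it saves. Set `overhead_per_cut=0` and deeper always looks better, which is a decent hint that the overhead is the part of the model worth arguing about.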

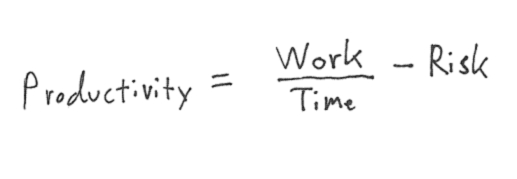

Oh yes, I almost forgot: there's another aspect to productivity, isn't there?

Risk. Always remember that design is risky. We can't guarantee that a given design will reduce the solution time by much, so even if more design decreases the expected time to complete the project, it can still hurt productivity by increasing risk. The exact cost depends on the project and circumstances, but it's something to keep in mind.

I actually use my formula, including the arbitrary exponent of two, to help me make estimates on projects. In my short career I've made mistakes both ways, over-designing and under-designing, and I feel it's a happy medium. What this heuristic lacks in accuracy it makes up for by being simple enough for my poor little brain to actually use.

- Oran Looney May 2nd 2007
